Part 1 - Deploy Synapse Apache Spark Pool and install PyPI packages

Azure Synapse Analytics provides powerful Apache Spark pools for big data processing and machine learning workloads. While creating these resources manually through the Azure portal is straightforward, infrastructure as code (IaC) approaches like Bicep provide significant benefits for repeatability, version control, and automation.

🚀 In this post, I’ll walk through how to use Bicep to deploy a Spark pool to an existing Synapse workspace and manage its Python packages.

Prerequisites

Before we begin, make sure you have:

- An Azure subscription.

- An existing Azure Synapse Analytics workspace.

- The latest version of the Azure CLI installed.

- Basic familiarity with Bicep templates.

Synapse Spark Pools Package Management

Spark pools in Azure Synapse provide a serverless Spark environment for big data processing. Some key points to understand:

- Spark pools are billed based on vCore-hours used.

- The Azure Synapse Runtime for Apache Spark ships with a default set of packages. Any other package you need from a public registry has to be installed separately.

- They support various node sizes and auto-scaling configurations.

- Custom libraries can be installed at the pool level or session level. Changes to session-level libraries aren’t persisted between sessions.

- Library management is crucial for maintaining consistency across environments.

Libraries provide reusable code that you might want to include in your programs or projects for Apache Spark in Azure Synapse Analytics (Azure Synapse Spark).

You might need to update your serverless Apache Spark pool environment for various reasons. For example, you might find that:

- One of your core dependencies released a new version.

- You need an extra package for training your machine learning model or preparing your data.

- A better package is available, and you no longer need the older package.

- Your team has built a custom Python .whl package that you need available in your Apache Spark pool.

There are two primary ways to install a library on a Spark pool:

- Install a workspace library that’s been uploaded as a workspace package.

- To update Python libraries, provide a requirements.txt or Conda environment.yml environment specification file to install packages from repositories like PyPI or Conda-Forge.
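As a rough sketch of how the second option looks in Bicep: the pool's libraryRequirements property carries a file name plus the text of that file. The snippet below assumes a Conda environment.yml file sits next to the template. The parameter name condaRequirements is hypothetical, and the rest of this post uses requirements.txt instead.

```bicep
// Sketch only: assumes an environment.yml file exists next to this template.
// The parameter name condaRequirements is hypothetical.
param condaRequirements object = {
  // Name of the specification file as it should appear on the pool.
  filename: 'environment.yml'
  // loadTextContent embeds the file's text into the template at compile time.
  content: loadTextContent('./environment.yml')
}
```

The object has the same shape as the requirements.txt variant, so it could be assigned to the pool's libraryRequirements property in the same way.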

Next, I’ll show you how to manage the Python libraries that your Spark pool loads from PyPI using a requirements.txt file and a Bicep template.

Spark Pool Bicep Template

Below is a snippet from a Bicep template that deploys a Spark pool to an existing Synapse workspace and configures which PyPI libraries are available to developers (you can find the complete template at the end of the post).

// For brevity, only the parameters relevant to library requirements are included.

param workspaceName string

param name string

// library requirements - by default loads the content from requirements.txt in the same folder.

param libraryRequirements object = {

filename: 'requirements.txt'

content: loadTextContent('./requirements.txt')

}

// Existing parent workspace resource

resource workspace 'Microsoft.Synapse/workspaces@2021-06-01' existing = {

name: workspaceName

}

// Create Apache Spark Pool

resource bigDataPool 'Microsoft.Synapse/workspaces/bigDataPools@2021-06-01' = {

name: name

parent: workspace

location: resourceGroup().location

properties: {

libraryRequirements: libraryRequirements

// Other required properties omitted for brevity

}

}

modules/synapse/big-data-pools/main.bicep

This template creates a Spark pool and passes the PyPI libraries listed in requirements.txt to the pool's libraryRequirements property. The complete module at the end of the post also enables auto-scaling by default.

External Requirements File

The Bicep module modules/synapse/big-data-pools/main.bicep expects you to provide the library requirements as a requirements.txt file in the same folder.

It has a libraryRequirements parameter that accepts the list of packages to load. By default, it uses the Bicep function loadTextContent to load the requirements from the external file.

param libraryRequirements object = {

filename: 'requirements.txt'

content: loadTextContent('./requirements.txt')

}

The requirements file lists the libraries to load, one per line, each pinned to a version in package==version form. For example:

polars==1.23.0

pandas==2.2.3

snowflake-connector-python==3.14.0

This approach keeps your Bicep template cleaner and makes it easier to manage library versions separately.
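Because the requirements live in a separate file, a calling template can also swap in a different file per environment by overriding the libraryRequirements parameter. The following is a minimal sketch under stated assumptions: the environmentName parameter, the file names requirements.dev.txt and requirements.prod.txt, and the symbolic name sparkPool are all hypothetical and not part of the original module.

```bicep
// Sketch only: file names, the environmentName parameter, and the module's
// symbolic name are assumptions made for illustration.
@description('Target environment; selects which requirements file is used.')
@allowed([
  'dev'
  'prod'
])
param environmentName string = 'dev'

param workspaceName string
param sparkPoolName string

// loadTextContent needs a compile-time constant path, so every candidate
// file is loaded up front and the right one is picked by key.
var requirementsByEnvironment = {
  dev: loadTextContent('./requirements.dev.txt')
  prod: loadTextContent('./requirements.prod.txt')
}

module sparkPool './modules/synapse/big-data-pools/main.bicep' = {
  name: 'spark-pool-${environmentName}'
  params: {
    workspaceName: workspaceName
    name: sparkPoolName
    // Overrides the module default, which reads ./requirements.txt.
    libraryRequirements: {
      filename: 'requirements.txt'
      content: requirementsByEnvironment[environmentName]
    }
  }
}
```

Note that the module's default value still calls loadTextContent('./requirements.txt'), so a requirements.txt file has to exist in the module folder for the template to compile, even when the parameter is overridden.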

Deploying the template

In a PowerShell session, run the following Azure CLI command from the same folder as the module template:

az deployment group create `

-g "<your existing resource group name>" `

-f "main.bicep" `

--parameters `

"workspaceName=<your existing workspace name>" `

"name=<your spark pool name>"

Or, in a Bash shell:

az deployment group create \

-g "<your existing resource group name>" \

-f "main.bicep" \

--parameters \

"workspaceName=<your existing workspace name>" \

"name=<your spark pool name>"

❗NOTE: When adding or removing PyPI packages, the deployment might take a long time. The deployment triggers a system job that installs or uninstalls the specified libraries and caches them. This process helps reduce overall session startup time.

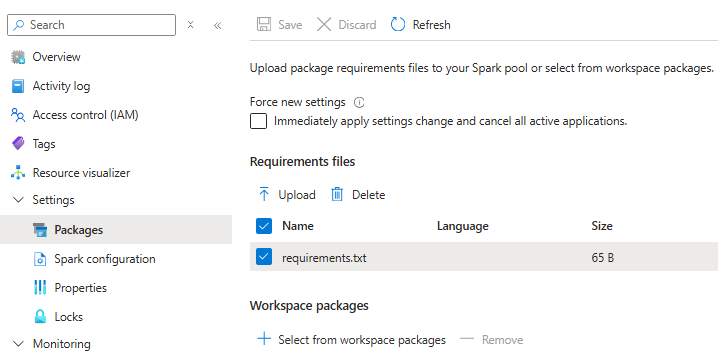

After deployment, you should see the requirements.txt file in the Packages section of your Spark pool in the Azure portal.
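If you would rather not pass parameters inline on the command line, a Bicep parameters file is one alternative. The sketch below assumes a file named main.bicepparam (a hypothetical name) stored next to main.bicep. The parameter names have to match the param declarations in the module, which are workspaceName and name.

```bicep
// main.bicepparam - sketch of a parameters file kept next to main.bicep.
using './main.bicep'

// Parameter names must match the param declarations in the module.
param workspaceName = '<your existing workspace name>'
param name = '<your spark pool name>'
```

With a recent Azure CLI and Bicep CLI, this file can be passed as --parameters main.bicepparam in place of the inline key=value pairs.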


modules/synapse/big-data-pools/main.bicep

This module deploys an Azure Synapse Apache Spark pool to an existing Azure Synapse workspace. It assumes that a requirements.txt file in the same folder lists all the PyPI libraries that need to be installed on the pool.

metadata name = 'Synapse Analytics Apache Spark Pools'

metadata description = 'This module deploys an Apache Spark Pool for an existing Azure Synapse Workspace.'

metadata owner = 'cloud-center-of-excellence/bicep-module-owners'

@description('Required. The name of the existing parent Synapse Workspace.')

param workspaceName string

// General

@minLength(3)

@maxLength(15)

@description('Required. Name of the Azure Spark Pool. Valid characters: Alphanumerics. Max length of 15 characters.')

param name string

@description('Optional. The geo-location where the resource lives.')

param location string = resourceGroup().location

@description('Optional. Tags of the resource.')

param tags object = {}

// AutoPauseProperties

@description('Optional. Number of minutes of idle time before the Big Data pool is automatically paused.')

param autoPauseDelayInMinutes int = 15

@description('Optional. Whether the Big Data pool is auto-paused.')

param autoPauseEnabled bool = true

// AutoScaleProperties

@description('Optional. Whether the Big Data pool is auto-scaled.')

param autoScaleEnabled bool = true

@minValue(3)

@maxValue(200)

@description('Optional. The maximum number of nodes the Big Data pool can have.')

param autoScaleMaxNodeCount int = 3

@minValue(3)

@maxValue(200)

@description('Optional. The minimum number of nodes the Big Data pool can have.')

param autoScaleMinNodeCount int = 3

// CustomLibraries (custom .whl or .jar files)

@description('Optional. List of custom libraries/packages associated with the spark pool.')

param customLibraries array = []

@description('Optional. The default folder where Spark logs will be written. Default value is "spark-logs".')

param defaultSparkLogFolder string = 'spark-logs'

@description('Optional. Whether autotune is enabled.')

param isAutotuneEnabled bool = true

@description('Optional. Whether compute isolation is enabled.')

param isComputeIsolationEnabled bool = false

@description('Optional. The library requirements. By default, loads the content from the requirements.txt file in the same folder as this module.')

param libraryRequirements object = {

filename: 'requirements.txt'

content: loadTextContent('./requirements.txt')

}

@minValue(3)

@maxValue(200)

@description('Optional. The number of nodes in the Big Data pool. Defaults to 3.')

param nodeCount int = 3

@allowed([

'Large'

'Medium'

'None'

'Small'

'XLarge'

'XXLarge'

'XXXLarge'

])

@description('Optional. The level of compute power that each node in the Big Data pool has.')

param nodeSize string = 'Small'

@allowed([

'HardwareAcceleratedFPGA'

'HardwareAcceleratedGPU'

'MemoryOptimized'

])

@description('Optional. The kind of nodes that the Big Data pool provides.')

param nodeSizeFamily string = 'MemoryOptimized'

@description('Optional. Whether session-level packages are enabled.')

param sessionLevelPackagesEnabled bool = true

@description('Optional. Whether dynamic executor allocation is enabled.')

param dynamicExecutorAllocationEnabled bool = true

@description('Optional. The maximum number of executors the Big Data pool can have. This value must be less than autoScaleMaxNodeCount.')

param dynamicExecutorAllocationMaxExecutors int = autoScaleMaxNodeCount - 1

@description('Optional. The minimum number of executors the Big Data pool can have.')

param dynamicExecutorAllocationMinExecutors int = 1

// SparkConfigProperties

@description('Optional. Spark configuration file to specify additional properties.')

param sparkConfigProperties object = {}

@description('Optional. The Spark events folder.')

param sparkEventsFolder string = 'spark-events'

@allowed([

'3.4'

])

@description('Optional. The version of Spark. Default value is 3.4.')

param sparkVersion string = '3.4'

// Existing parent resource

resource workspace 'Microsoft.Synapse/workspaces@2021-06-01' existing = {

name: workspaceName

}

// Create Apache Spark Pool

resource bigDataPool 'Microsoft.Synapse/workspaces/bigDataPools@2021-06-01' = {

name: name

location: location

tags: tags

parent: workspace

properties: {

autoPause: {

delayInMinutes: autoPauseDelayInMinutes

enabled: autoPauseEnabled

}

autoScale: {

enabled: autoScaleEnabled

maxNodeCount: autoScaleMaxNodeCount

minNodeCount: autoScaleMinNodeCount

}

customLibraries: customLibraries

defaultSparkLogFolder: defaultSparkLogFolder

dynamicExecutorAllocation: {

enabled: dynamicExecutorAllocationEnabled

maxExecutors: dynamicExecutorAllocationMaxExecutors

minExecutors: dynamicExecutorAllocationMinExecutors

}

isAutotuneEnabled: isAutotuneEnabled

isComputeIsolationEnabled: isComputeIsolationEnabled

libraryRequirements: libraryRequirements

nodeCount: nodeCount

nodeSize: nodeSize

nodeSizeFamily: nodeSizeFamily

sessionLevelPackagesEnabled: sessionLevelPackagesEnabled

sparkConfigProperties: sparkConfigProperties

sparkEventsFolder: sparkEventsFolder

sparkVersion: sparkVersion

}

}

output sparkPoolName string = bigDataPool.name

output sparkPoolId string = bigDataPool.id

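To round this off, here is a hedged sketch of how a parent template might consume the module, override a few of its defaults, and surface its outputs. The pool name sparkpool01, the chosen node size and node counts, and the symbolic name sparkPool are illustrative assumptions. Only the parameter and output names come from the module above.

```bicep
// Sketch only: the parameter values and the symbolic name sparkPool are
// illustrative assumptions, not part of the original module.
param workspaceName string

module sparkPool './modules/synapse/big-data-pools/main.bicep' = {
  name: 'spark-pool-deployment'
  params: {
    workspaceName: workspaceName
    // 3 to 15 alphanumeric characters, per the module's constraints.
    name: 'sparkpool01'
    nodeSize: 'Medium'
    autoScaleMinNodeCount: 3
    autoScaleMaxNodeCount: 10
    // Kept below autoScaleMaxNodeCount, as the module's description requires.
    // If omitted, the module defaults this to autoScaleMaxNodeCount - 1.
    dynamicExecutorAllocationMaxExecutors: 6
  }
}

// Surface the module outputs to the caller.
output sparkPoolName string = sparkPool.outputs.sparkPoolName
output sparkPoolId string = sparkPool.outputs.sparkPoolId
```

Since libraryRequirements is not overridden here, the module falls back to its default and reads requirements.txt from its own folder.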